KubeGreen with Karpenter

1. Introduction to Kube-Green

Welcome to this technical blog, where we look at how to use kube-green to make Kubernetes clusters more efficient and sustainable. kube-green is one of the leading tools in the push for greener, more energy-efficient technology in the IT sector. In this article, we discuss how kube-green works and how it can change the way teams approach sustainability in their infrastructure. Along the way, we share best practices, tips, and tricks for getting the most out of kube-green in your organization.

In a Kubernetes cluster that uses an autoscaler such as Karpenter, efficiency is what really drives down operating costs. Karpenter dynamically provisions EC2 instances to meet Pod demand, but it does not by itself reduce infrastructure costs during predictable low-usage periods such as nighttime, because the workloads keep running. This is where scheduled scale-downs come in, and kube-green is a Kubernetes tool designed for exactly that purpose.

2. Introduction to Karpenter

Karpenter is an open-source Kubernetes cluster autoscaler, originally developed by AWS, that provisions and scales nodes dynamically to meet workload requirements. It optimizes for both cost and performance through intelligent instance type selection (including Spot Instances) and right-sized resource allocation. Fast node provisioning, cloud-native integration, and minimal configuration make dynamic workloads easier to operate: Karpenter reduces provisioning delays and cluster operational complexity while improving scalability.

3. Karpenter Scale-Up Process

Karpenter scales up differently from tools such as the Kubernetes Cluster Autoscaler: it provisions nodes directly through the cloud provider's API instead of only adjusting the desired capacity of an Auto Scaling group (ASG). Here is a step-by-step breakdown of how Karpenter brings up nodes:

Detection of Unscheduled Pods

Karpenter continuously watches the Kubernetes API server for Pods that the scheduler has marked as unschedulable.

A Pod is unschedulable when no node in the cluster has enough free capacity for its resource requests (CPU, memory, GPU, and so on) or satisfies its scheduling constraints.
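
For illustration, this is roughly what such a Pod's status looks like in the API. It is an excerpt of `kubectl get pod <name> -o yaml`, and the node count and message text are hypothetical. Karpenter reacts to Pods whose `PodScheduled` condition is `False` with reason `Unschedulable`.

```yaml
# Excerpt of a Pod's status after the scheduler failed to place it.
# The message is illustrative; the scheduler's exact wording varies.
status:
  phase: Pending
  conditions:
    - type: PodScheduled
      status: "False"
      reason: Unschedulable
      message: "0/3 nodes are available: 3 Insufficient cpu."
```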

Pod Requirement Analysis

Karpenter examines the Pod's resource requests and the scheduling constraints defined in its manifest, such as node selectors, tolerations, and affinity and anti-affinity rules.

This ensures that a newly provisioned node meets the exact requirements of the pending workload.
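
A sketch of a Pod manifest carrying the kinds of constraints Karpenter takes into account. The Pod name, image, `app` label, and the `dedicated` toleration are hypothetical; `karpenter.sh/capacity-type`, `kubernetes.io/arch`, and `kubernetes.io/hostname` are standard well-known label keys. The `spot` selector only works if some NodePool allows Spot capacity.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: demo-app                  # hypothetical name
  labels:
    app: demo-app
spec:
  containers:
    - name: app
      image: nginx:1.27           # placeholder image
      resources:
        requests:                 # Karpenter picks an instance type with room for these
          cpu: "2"
          memory: 4Gi
  nodeSelector:
    karpenter.sh/capacity-type: spot   # require Spot capacity
  tolerations:                    # hypothetical taint a dedicated node might carry
    - key: dedicated
      operator: Equal
      value: demo
      effect: NoSchedule
  affinity:
    nodeAffinity:                 # only amd64 nodes
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/arch
                operator: In
                values: ["amd64"]
    podAntiAffinity:              # keep Pods labeled app=demo-app on separate nodes
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: demo-app
          topologyKey: kubernetes.io/hostname
```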

Instance Selection using Cloud Provider APIs

Karpenter talks directly to the cloud provider's APIs, such as the Amazon EC2 API. It dynamically selects the instance types that best match the Pods' resource requests while keeping the cluster cost-efficient.

Karpenter may choose Spot Instances to cut costs, or GPU instance types for machine-learning workloads, within whatever bounds its NodePool configuration allows. Unlike the Cluster Autoscaler, Karpenter does not use ASGs at all, which makes much more fine-grained instance selection possible.
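
In current Karpenter releases, the instance types Karpenter may choose from are constrained by a NodePool. The sketch below assumes the `karpenter.sh/v1` API and an `EC2NodeClass` named `default`; the NodePool name, instance categories, and CPU limit are illustrative choices. It allows both Spot and On-Demand capacity, and Karpenter prioritizes Spot when both are allowed.

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        # Allow both capacity types; Karpenter prefers Spot when it can.
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["spot", "on-demand"]
        # Restrict to compute, general-purpose, and memory-optimized families.
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values: ["c", "m", "r"]
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default
  limits:
    cpu: 1000          # stop provisioning once the pool reaches 1000 vCPUs
```

A separate NodePool for GPU workloads could instead restrict `karpenter.k8s.aws/instance-category` to GPU families such as `g` or `p`.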

Dynamic Node Provisioning

Karpenter launches one or more new EC2 instances directly through the cloud provider.

The instances are launched with a configuration that matches the cluster's needs, such as the AMI ID, user data, security groups, and labels used for Kubernetes node registration. This is much faster and more flexible than changing an ASG's desired capacity.
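
On AWS, these launch settings come from an `EC2NodeClass` that the NodePool references. A minimal sketch assuming the `karpenter.k8s.aws/v1` API: the IAM role name and the `my-cluster` value of the `karpenter.sh/discovery` tag are hypothetical placeholders for your own cluster. An optional `userData` field can carry custom bootstrap configuration; it is left out here.

```yaml
apiVersion: karpenter.k8s.aws/v1
kind: EC2NodeClass
metadata:
  name: default
spec:
  role: KarpenterNodeRole-my-cluster   # hypothetical IAM role for the nodes
  amiSelectorTerms:
    - alias: al2023@latest             # latest Amazon Linux 2023 EKS-optimized AMI
  subnetSelectorTerms:                 # subnets tagged for this cluster
    - tags:
        karpenter.sh/discovery: my-cluster
  securityGroupSelectorTerms:          # security groups tagged for this cluster
    - tags:
        karpenter.sh/discovery: my-cluster
```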

Node Registration in Kubernetes

Once the instance is running, the kubelet on it registers the node with the Kubernetes control plane, and the node's resources become available for scheduling. The Kubernetes scheduler then places the pending Pods on the newly provisioned node.
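
After registration, a Karpenter-launched node carries well-known labels describing how it was provisioned, which is what lets the scheduler match pending Pods' node selectors and affinities against it. An illustrative excerpt of `kubectl get node <name> -o yaml`; all values are hypothetical.

```yaml
metadata:
  labels:
    karpenter.sh/nodepool: default               # NodePool that launched the node
    karpenter.sh/capacity-type: spot             # spot or on-demand
    node.kubernetes.io/instance-type: m5.large   # instance type Karpenter chose
    topology.kubernetes.io/zone: us-east-1a
    kubernetes.io/arch: amd64
```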

Efficient Use of Resources

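Where possible, Karpenter packs multiple Pods onto a single node to improve utilization: it batches pending Pods and chooses instance types that fit their combined resource requests. It can also consolidate existing capacity by removing empty nodes or replacing underutilized ones with fewer or cheaper nodes.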
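
Consolidation is configured per NodePool. A minimal excerpt assuming the `karpenter.sh/v1` API; the `template` section is omitted, so this should be merged into a complete NodePool rather than applied on its own. The values are illustrative.

```yaml
# Excerpt: the disruption block of a NodePool spec.
spec:
  disruption:
    # Remove empty nodes and replace underutilized ones with cheaper or fewer nodes.
    consolidationPolicy: WhenEmptyOrUnderutilized
    # Wait this long after a Pod is added to or removed from a node before consolidating it.
    consolidateAfter: 1m
    budgets:
      - nodes: "10%"   # disrupt at most 10% of this pool's nodes at a time
```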

Scaling Transparency

The entire scale-up is transparent to end users: workloads get the capacity they need without manual intervention.

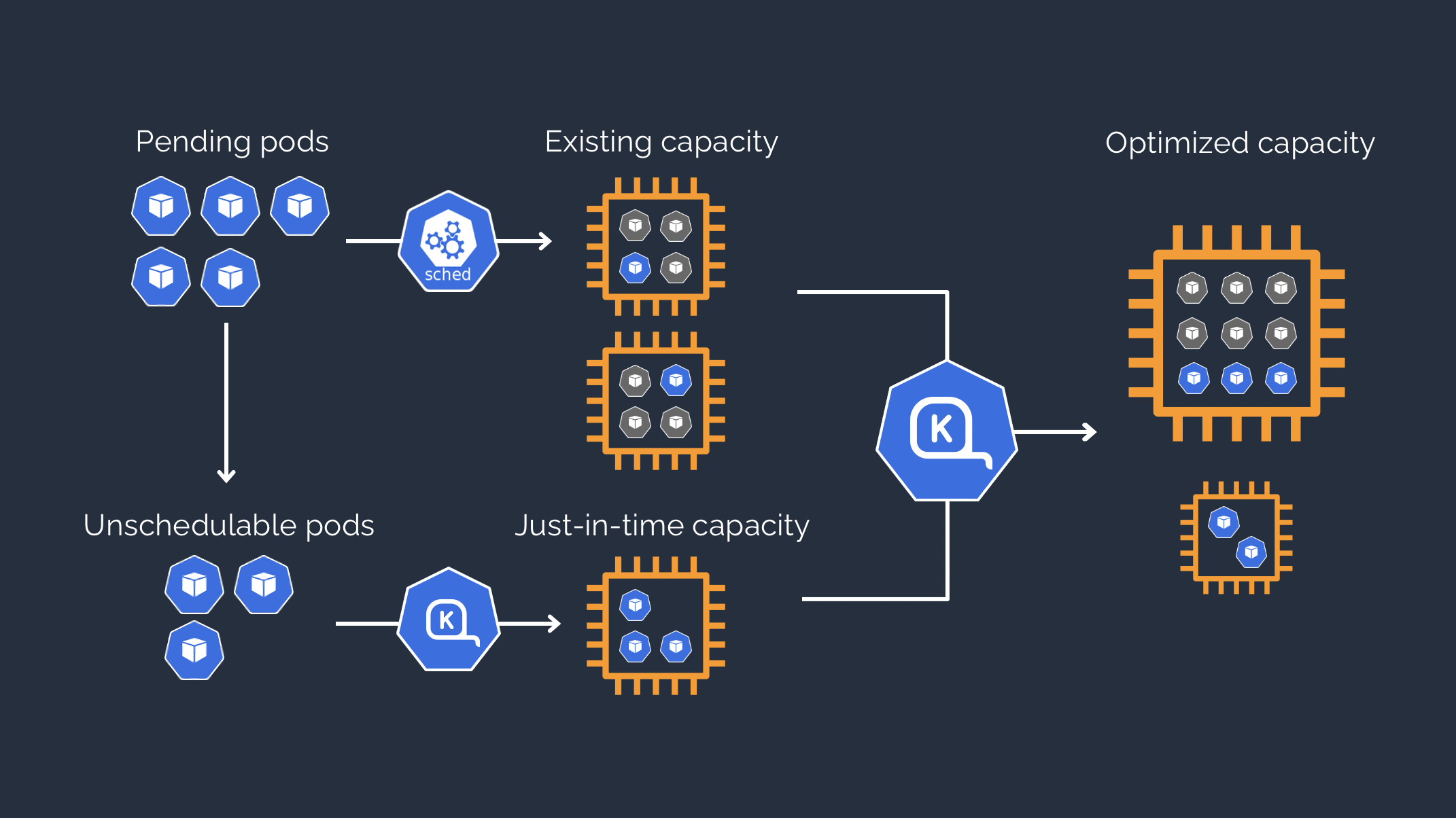

4. Karpenter WorkFlow Diagram

5. Understanding the Challenges

Karpenter is very good at scaling nodes up and down in response to actual demand, but it does not proactively scale anything down during predictable low-usage windows: as long as Deployments keep their replicas running, the nodes hosting them stay up. This adds cost overhead, because EC2 instances keep running even when the application workload is at a minimum.

Key Challenges:

Karpenter provisions EC2 instances dynamically, but it does not decide when workloads themselves should stop, so infrastructure serving idle applications stays up. Applications that keep running overnight without interruption incur unnecessary operating costs. Scaling Deployments down by hand, on the other hand, is labor-intensive and error-prone.

6. Resolution with KubeGreen

With kube-green, workloads are scaled down automatically during predefined time windows, which lets Karpenter reduce the number of nodes in the cluster. Here's how it works:

Scheduled Scale-Downs: kube-green can be configured to put the Deployments in a namespace to sleep at night, based on a custom schedule (weekdays, sleep time, wake-up time, and time zone) declared in a SleepInfo custom resource.

Workload Management: kube-green sets the replica count of each Deployment to zero during off-peak hours.

Node Scaling: Once the Pods are gone, Karpenter detects the now-empty EC2 instances and terminates them to save costs.

Scale-Up Automation: At the start of the day, kube-green restores each Deployment to its original replica count, and Karpenter provisions the nodes needed to run the returning Pods.
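
A sketch of a SleepInfo for this night-time pattern, using the same `kube-green.com/v1alpha1` API as the policies in the implementation section. The name and namespace are hypothetical; `suspendCronJobs` is an optional field that also suspends the namespace's CronJobs while it sleeps.

```yaml
apiVersion: kube-green.com/v1alpha1
kind: SleepInfo
metadata:
  name: nightly-sleep      # hypothetical name
  namespace: dev           # hypothetical namespace
spec:
  weekdays: "1-5"          # Monday to Friday
  sleepAt: "20:00"         # scale Deployments to zero at 8 PM
  wakeUpAt: "08:00"        # restore original replica counts at 8 AM
  timeZone: "Asia/Kolkata"
  suspendCronJobs: true    # also suspend CronJobs while the namespace sleeps
```

Because both times apply to weekdays 1-5, a namespace put to sleep on Friday evening should stay asleep until Monday morning, which also covers the weekend.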

7. Benefits of Kubegreen

Cost Optimization: Delivers significant cloud savings by ensuring that nodes do not sit idle when demand is low.

Automation: Replaces manual scaling adjustments with fully automated scheduling.

Integration: kube-green manages Pod replicas while Karpenter manages the nodes, so the two work well together.

Operational Efficiency: Ensures resources are used only when needed, freeing up team bandwidth for other critical tasks.

9. Implementation of Kube Green

Installation

```shell
# Create a dedicated namespace and install the kube-green Helm chart into it
kubectl create ns kube-green
helm repo add kube-green https://kube-green.additi.fr/
helm install my-kube-green kube-green/kube-green --version 0.0.13 -n kube-green
```

Sleep Policy

```yaml
apiVersion: kube-green.com/v1alpha1
kind: SleepInfo
metadata:
  name: working-hours
  namespace: test
spec:
  weekdays: "1-5"            # Monday to Friday
  sleepAt: "20:00"           # scale Deployments in "test" to zero at 8 PM
  wakeUpAt: "08:00"          # restore the original replicas at 8 AM
  timeZone: "Asia/Kolkata"
```

Sleep Policy with Exceptions

```yaml
apiVersion: kube-green.com/v1alpha1
kind: SleepInfo
metadata:
  name: working-hours
  namespace: test
spec:
  weekdays: "1-5"
  sleepAt: "20:00"
  wakeUpAt: "08:00"
  timeZone: "Asia/Kolkata"
  excludeRef:                # resources that keep running while the namespace sleeps
    - apiVersion: "apps/v1"
      kind: Deployment
      name: abc
```

Note: Any Deployment listed under excludeRef keeps running while the rest of the namespace is asleep.

Observation

Kube Green Logs

How Kube Green Works

A SleepInfo object is created in a namespace. At the sleep time, kube-green scales the Deployments in that particular namespace down to zero replicas, first recording each Deployment's original replica count (kube-green keeps this state in a Secret in the same namespace). At the wake-up time, it reads the saved values and restores the original number of replicas.

10. Conclusion

In clusters that scale dynamically with Karpenter, kube-green is especially valuable. It automates workload scale-downs during predictable downtime, which complements Karpenter's autoscaling, drives significant cost savings, and simplifies operations. If your business aims to cut cloud expenses without sacrificing service reliability, kube-green deserves a place in your Kubernetes toolkit.