About the Customer

Annapurna Finance Pvt. Ltd. (AFPL) was established to serve the economically weaker sections of society. It brings them into the mainstream by providing need-based financial services at their doorstep. Before migrating to AWS, Annapurna Finance suffered repeated downtime. Frequent network and infrastructure problems at its provider's data center made services inaccessible to end users, which hurt the company's reputation and revenue. Addressing this required modernizing both the infrastructure and the applications: the loan origination system (LOS) and the loan management system (LMS). The monolithic architecture of the MSME LOS application limited its performance and scalability. Moving it to a microservices architecture would let Annapurna keep pace with its growing customer base across India.

Customer Challenge

- Annapurna was experiencing uptime problems. Frequent network and infrastructure issues at the service provider's data center often left its services inaccessible to end users, which damaged its reputation and reduced revenue.

- The MSME LOS application was built as a monolith, which limited its performance and scalability. Adopting a microservices architecture and modernizing the underlying infrastructure would let the organization keep pace with its expanding customer base across India.

- The customer did not use any CI/CD or DevOps tooling to roll out changes to the existing application, so every deployment required some downtime. Any downtime could hurt revenue, customer loyalty and the business as a whole, so they could not afford to take risks.

They were looking for a partner to assess their readiness for cloud migration, build the financial justification, estimate the associated costs and create a comprehensive cloud migration plan. The partner would then migrate the applications to the AWS Cloud.

- Monitoring and Observability: Without a robust monitoring strategy, it was hard to detect application issues and potential bottlenecks in real time, which prevented proactive remediation.

- Scalability and Deployment Delays: Annapurna Finance's applications ran on standalone servers that did not scale well, causing performance issues at peak times. Manual deployments delayed the release of new features and updates to the market.

Workmates Core2Cloud Solution Approach

- Workmates set out to support the customer holistically in meeting both its business and technical objectives. The team held detailed discussions with Annapurna Finance's technical and management teams to understand the existing application infrastructure and its pain points. It also gathered their expectations of the Workmates team and of the AWS Cloud infrastructure.

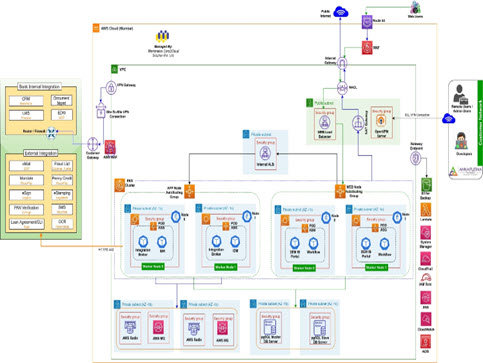

- A VPC was provisioned exclusively for the customer in the Mumbai Region of the AWS Cloud.

- Two separate network tiers were created in the new VPC: public (Internet-facing) and private (non-Internet-facing) subnets. An SSL VPN was configured so that users can connect remotely.

- Amazon Elastic Kubernetes Service (EKS) was provisioned to deploy and orchestrate the customer's containerized applications. They run on EKS cluster nodes managed by Auto Scaling groups (ASGs) in the private subnet. The pods running the containerized applications scale out and scale in as the number of concurrent client requests rises and falls.

- The MySQL database runs on a separate Amazon Aurora (MySQL-compatible) instance. The database service was not suited to running in a container on the EKS Auto Scaling group nodes.

- The Cassandra and MongoDB database servers were hosted on separate EC2 instances outside the EKS cluster.

- The Elasticsearch and Kafka servers run on separate EC2 instances outside the EKS cluster.

- External users access the LMS portal through an Application Load Balancer (ALB) provisioned in the public subnet.

- A CI/CD pipeline running on a Jenkins server was created to deploy code changes from the code repository.

- Amazon CloudWatch was used to monitor server resources and to trigger alarms on any service outage or internal server issue.

- Amazon S3 object storage was provisioned as the native backup destination for daily data backups.

- The servers were configured with the latest Windows Server and Linux versions, according to customer requirements. Each server's root EBS volume was reserved for the OS. A separate EBS volume holds application and database data.

- Periodic patching of the servers was configured through AWS Systems Manager (SSM) Patch Manager.

- The MSME LOS application workload was modernized from a monolithic to a microservices-based architecture. Its code changes are now deployed automatically through CI/CD pipelines running on a Jenkins server.
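The pod scale-out and scale-in behaviour described above can be illustrated with the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler (HPA). The formula is desired replicas = ceil(current replicas × current metric / target metric). No change is made when that ratio is within a tolerance of 1.0 (0.1 by default), and the result is clamped to the configured minimum and maximum. The case study does not say which autoscaler or metric was used. This is a minimal sketch that assumes an HPA driven by a per-pod average such as requests per second. The function name and the numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Hypothetical sketch of the HPA replica rule (not the EKS/Kubernetes source).

    current_metric and target_metric are per-pod averages, e.g. requests/second.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        # Scale proportionally to how far the metric is from the target.
        desired = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, desired))
```

Here is an example. Four pods averaging 150 requests per second against a target of 100 give ceil(4 × 1.5) = 6 pods. If load drops to 40 per pod on six pods, the result is ceil(6 × 0.4) = 3. The node Auto Scaling group then has to provide enough capacity for the pods that the autoscaler requests.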
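A CloudWatch outage alarm for the EC2-hosted servers (Cassandra, MongoDB, Elasticsearch and Kafka) can be sketched as the keyword arguments to `put_metric_alarm` in the AWS SDK for Python (boto3). The choice of the EC2 `StatusCheckFailed` metric is an assumption, because the case study does not name the metrics it alarms on. The helper name, instance ID, SNS topic ARN, alarm name and thresholds are all hypothetical placeholders.

```python
def status_check_alarm_params(instance_id: str, sns_topic_arn: str,
                              evaluation_periods: int = 2) -> dict:
    """Hypothetical helper: kwargs for boto3 CloudWatch put_metric_alarm.

    Fires when the instance fails its EC2 status checks for
    `evaluation_periods` consecutive one-minute periods.
    """
    return {
        "AlarmName": f"status-check-failed-{instance_id}",
        "AlarmDescription": "EC2 instance failed system or instance status checks",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,  # seconds
        "EvaluationPeriods": evaluation_periods,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # If the metric stops arriving (e.g. the instance is stopped), treat it as an outage.
        "TreatMissingData": "breaching",
        # Hypothetical SNS topic that notifies the operations team.
        "AlarmActions": [sns_topic_arn],
    }
```

The result would be passed on as `boto3.client("cloudwatch", region_name="ap-south-1").put_metric_alarm(**params)`, where `ap-south-1` is the Mumbai Region. Building the arguments in a separate function keeps the alarm definition reviewable and testable without AWS credentials.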

Results and Benefits

- Scalability: Microservices allow individual components to be scaled independently based on demand. This can result in better resource utilization and cost efficiency.

- Flexibility and Agility: Microservices enable faster development cycles and deployments.

- Improved Fault Isolation: If a specific microservice fails, it doesn’t necessarily affect the entire application. This enhances fault tolerance and system reliability.

- Technology Diversity: Different microservices can use different technologies, programming languages, and frameworks that are best suited for their specific tasks.

- Enhanced Maintenance: Since services are smaller and focused, it’s easier to maintain and update them without affecting the entire application.

- Ease of Deployment: Microservices can be independently deployed, enabling continuous integration and continuous deployment (CI/CD) practices.

- Enhanced Innovation: Teams can innovate and experiment with new features without being constrained by the entire application’s architecture.

- Resource Efficiency: Microservices can be deployed on different infrastructure resources based on their requirements, optimizing resource usage.

- Team Autonomy: Different teams can work on different services, promoting ownership and specialization, which can improve overall development efficiency.

Security Considerations

- AWS IAM role-based access control restricts users to only the resources they need.

- AWS CloudTrail provides deep visibility into API calls, including who made each call, what was called and from where. All user activity is tracked and logged.

- For any administrative task, remote users must connect through the VPN client to reach the servers. All RDP and SSH ports are reachable only through the OpenVPN server, and the default ports are changed to custom ports.

- Database ports are accessible only from the application containers. This restriction is enforced with security groups.

- All container workloads run in the private subnets, and the applications are exposed through an Application Load Balancer Ingress. SSL/TLS listeners are configured on the ALB, with certificates issued by AWS Certificate Manager (ACM).
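The database-port restriction can be expressed as a security group rule whose source is the application security group rather than an IP range. This is a minimal sketch that builds the arguments for the EC2 `authorize_security_group_ingress` call in boto3. It assumes the application pods carry a dedicated security group, such as the EKS node group's group or a security group for pods. The helper name and group IDs are hypothetical. The database ports are the engines' defaults (Aurora MySQL 3306, MongoDB 27017, Cassandra CQL 9042), assumed because the case study does not state them.

```python
def db_ingress_from_app_sg(db_sg_id: str, app_sg_id: str, port: int) -> dict:
    """Hypothetical helper: kwargs for boto3 EC2 authorize_security_group_ingress.

    Allows TCP traffic on `port` into the database security group only from
    resources that belong to the application security group (no CIDR ranges).
    """
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid TCP port: {port}")
    return {
        "GroupId": db_sg_id,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": port,
                "ToPort": port,
                # Referencing a security group instead of IpRanges limits the
                # source to members of that group.
                "UserIdGroupPairs": [
                    {"GroupId": app_sg_id, "Description": "application tier only"}
                ],
            }
        ],
    }


# Default engine ports, assumed for illustration only.
DEFAULT_DB_PORTS = {"aurora-mysql": 3306, "mongodb": 27017, "cassandra": 9042}

if __name__ == "__main__":
    for engine, port in DEFAULT_DB_PORTS.items():
        rule = db_ingress_from_app_sg("sg-0cccc3333dddd4444", "sg-0aaaa1111bbbb2222", port)
        print(engine, rule["IpPermissions"][0]["FromPort"])
```

Each dictionary would be passed as `boto3.client("ec2").authorize_security_group_ingress(**rule)`. Referencing the application group means new pods or nodes gain database access automatically when they join that group, and nothing else does.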

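AWS Services Used

Amazon VPC, Amazon EKS, Amazon EC2, Amazon EC2 Auto Scaling, Amazon Aurora, Application Load Balancer, Amazon S3, Amazon EBS, Amazon CloudWatch, AWS CloudTrail, AWS IAM, AWS Systems Manager, AWS Certificate Manager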

Other Services

SSL VPN (OpenVPN), Jenkins, Helm, Prometheus, Grafana, EFK (Elasticsearch, Fluentd, Kibana)

Solution Architecture